21 Apr 2022

Our group built a 5-degree-of-freedom robotic arm from Dynamixel servos and 3D-printed components designed in Fusion 360. For object detection, we applied transfer learning to a pretrained YOLOv5 nano model, a single-stage detector. We also implemented and tested multiple segmentation methods for determining the gripper pose. All of the software was designed and implemented in ROS (Robot Operating System), which served as the middleware.

Results

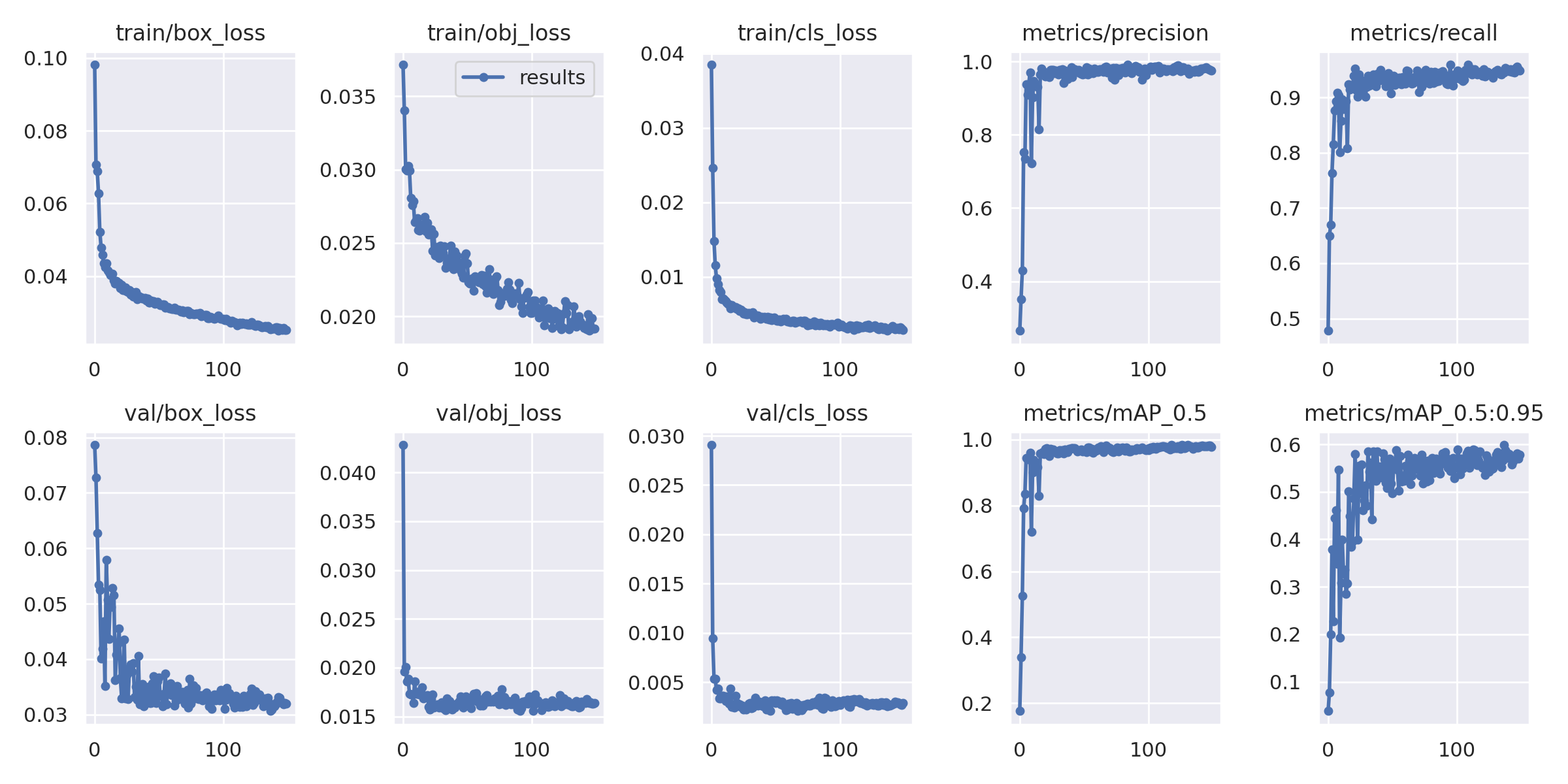

Training plots for the YOLOv5 neural network on the custom dataset.

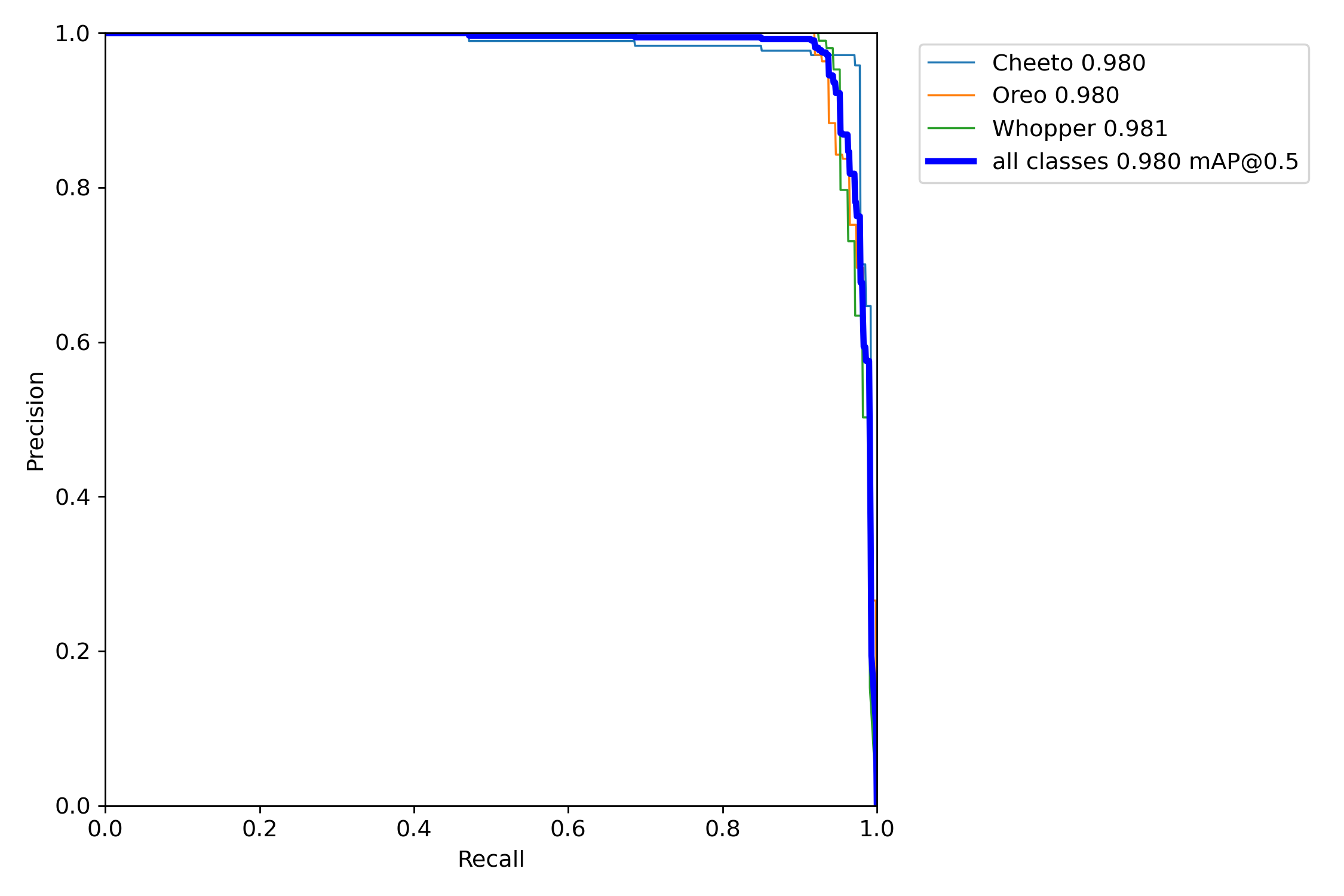

Precision-recall curves at an IoU threshold of 0.5 for each class in the dataset and for their mean, with the area under each curve (AUPRC).
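To make the metric concrete, here is a minimal sketch of how the area under a precision-recall curve can be computed for one class at an IoU threshold of 0.5. It is not the YOLOv5 evaluation code: the function names `iou` and `average_precision`, the `(x1, y1, x2, y2)` box format, the greedy matching rule, and the all-point interpolation are assumptions chosen for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_thresh=0.5):
    """Area under the precision-recall curve for one class in one image.

    detections: list of (confidence, box); ground_truth: list of boxes.
    Hypothetical helper, not part of any library.
    """
    if not ground_truth:
        return 0.0
    matched = set()
    tp_flags = []
    # Visit detections from most to least confident.
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_j = 0.0, None
        for j, gt in enumerate(ground_truth):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j is not None and best_iou >= iou_thresh:
            matched.add(best_j)      # true positive: claims this ground-truth box
            tp_flags.append(True)
        else:
            tp_flags.append(False)   # false positive
    # Running precision and recall after each detection.
    precisions, recalls, tp = [], [], 0
    for rank, flag in enumerate(tp_flags, start=1):
        tp += flag
        precisions.append(tp / rank)
        recalls.append(tp / len(ground_truth))
    # Make precision non-increasing in recall (the usual envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas under the envelope.
    area, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        area += (r - prev_recall) * p
        prev_recall = r
    return area
```

Averaging this value over all classes gives the mean shown in the plot, commonly reported as mAP@0.5.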

Segmentation Results

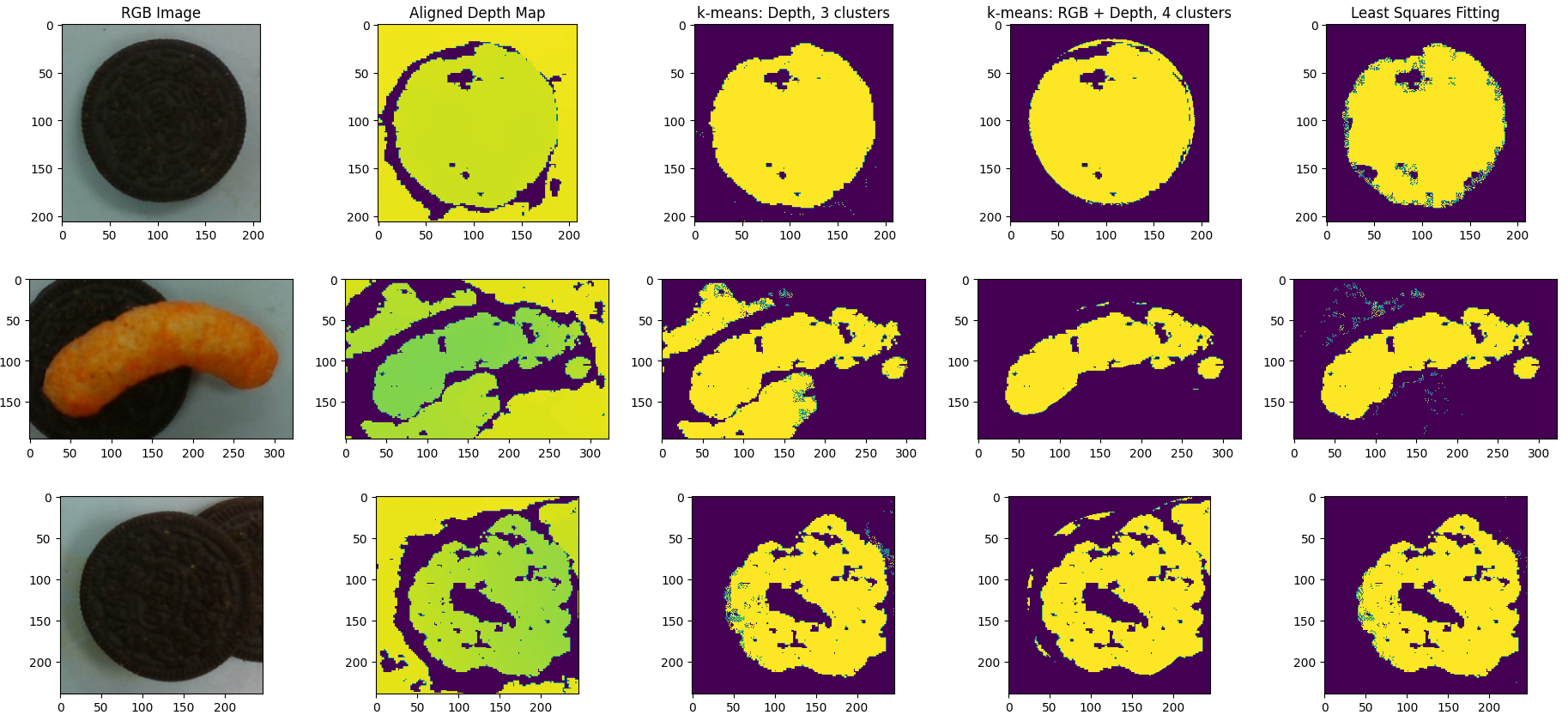

Three test cases. From left to right: RGB image, aligned depth map, k-means on the depth map with 3 clusters, k-means on the RGB and depth channels with 4 clusters, and least-squares fitting of a plane.
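Two of the methods compared above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the project's implementation: `kmeans_depth` clusters a flat list of depth values with evenly spaced initial centers, and `fit_plane` fits z = a·x + b·y + c by solving the least-squares normal equations. The function names, the initialization scheme, and the residual tolerance are all hypothetical.

```python
def kmeans_depth(depths, k=3, iters=20):
    """Cluster 1-D depth values into k groups; returns (labels, centers)."""
    lo, hi = min(depths), max(depths)
    # Assumed initialization: centers spread evenly over the depth range.
    centers = [lo + (hi - lo) * (j + 0.5) / k for j in range(k)]
    labels = [0] * len(depths)
    for _ in range(iters):
        # Assignment step: each depth goes to its nearest center.
        labels = [min(range(k), key=lambda j: abs(d - centers[j])) for d in depths]
        # Update step: each center moves to the mean of its members.
        for j in range(k):
            members = [d for d, lab in zip(depths, labels) if lab == j]
            if members:
                centers[j] = sum(members) / len(members)
    return labels, centers


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c to (x, y, z) points."""
    n = len(points)
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    # Normal equations A @ [a, b, c] = rhs.
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = _det3(A)
    if abs(d) < 1e-12:
        raise ValueError("points are degenerate; plane is not unique")
    coeffs = []
    for col in range(3):
        # Cramer's rule: replace one column of A with rhs.
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(_det3(m) / d)
    return tuple(coeffs)


def off_plane(points, plane, tol=0.01):
    """Indices of points whose z differs from the plane by more than tol."""
    a, b, c = plane
    return [i for i, (x, y, z) in enumerate(points)
            if abs(z - (a * x + b * y + c)) > tol]
```

In a tabletop scene, one plausible use is to fit the plane to points believed to lie on the table and treat the points returned by `off_plane` as object candidates. A real pipeline would likely need a robust fit, since objects in the scene bias a plain least-squares estimate.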

State Machine

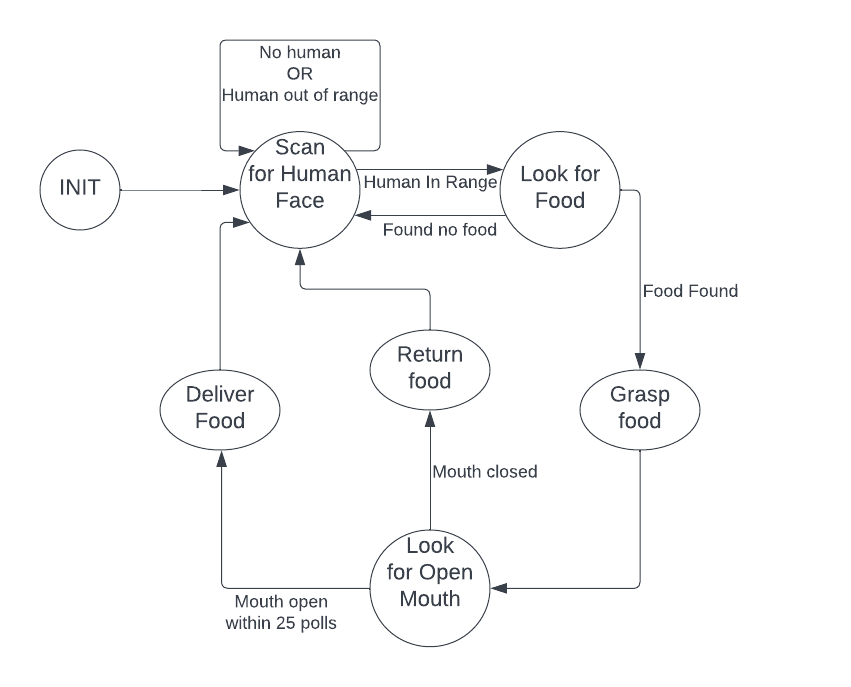

High-level state machine of SNACBot’s workflow.
